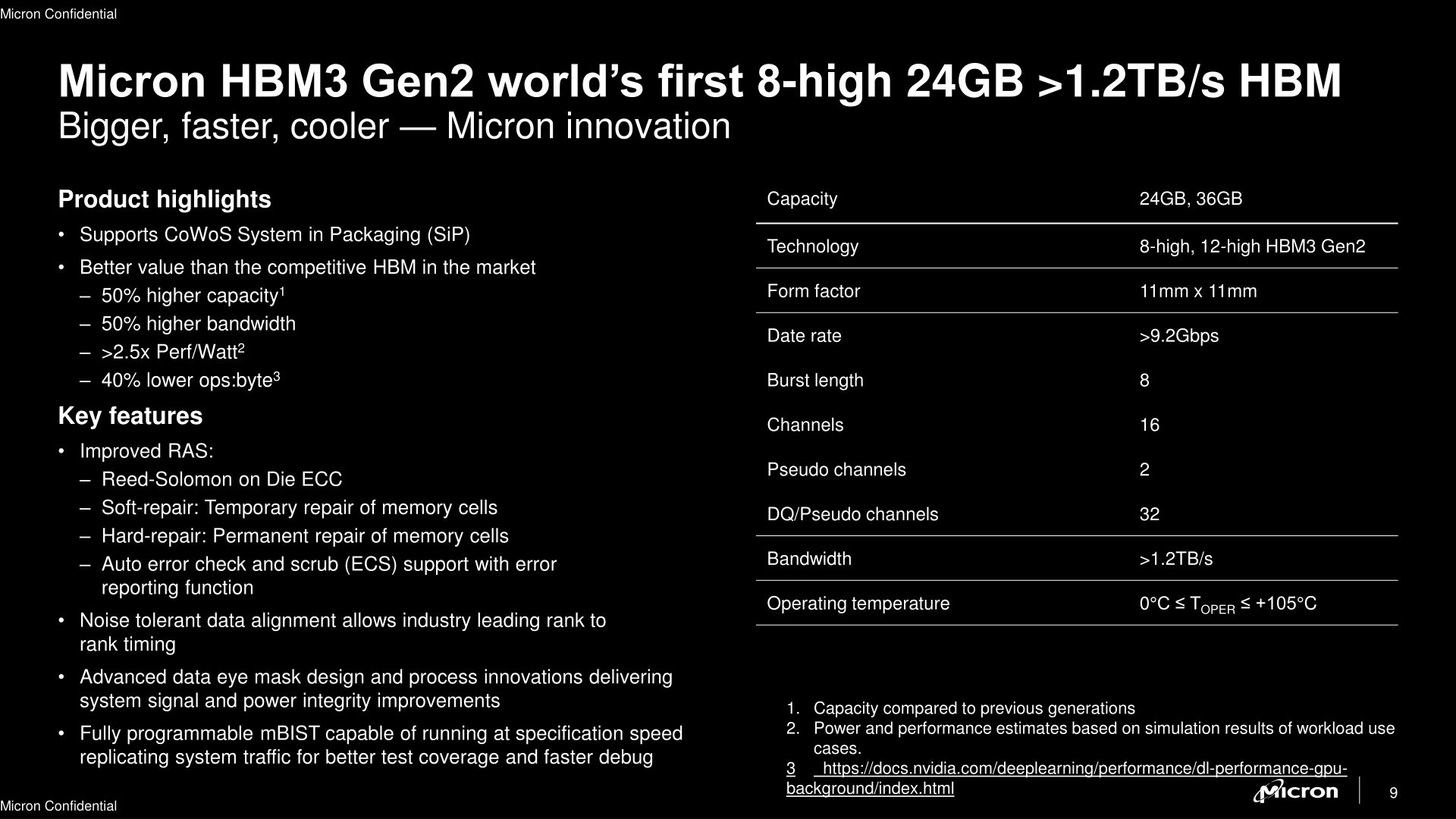

Micron has just announced its new HBM3 Gen2 memory, now sampling to customers, with what it calls the world's fastest aggregate bandwidth at 1.2TB/sec per stack. It also offers the highest-capacity 8-high stack at 24GB, with a 36GB stack on the way. Micron says HBM3 Gen2 is its most power-efficient HBM yet, with a huge 2.5x improvement in performance per watt over its previous-gen HBM2e memory.
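The 1.2TB/sec figure is per stack. It comes from multiplying the per-pin data rate by the width of the stack's interface. The sketch below is a minimal back-of-the-envelope check that relies on two assumptions not stated in this article: a 1024-bit interface per stack, which is standard for HBM3, and a per-pin rate of 9.2Gb/s, which is Micron's quoted speed.

```python
def stack_bandwidth_tb_s(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Peak bandwidth of one HBM stack in TB/s (decimal units).

    Assumes a 1024-bit interface per stack (the HBM3 standard width).
    """
    total_gbit_s = pin_rate_gbps * bus_width_bits  # gigabits/s across all data pins
    total_gbyte_s = total_gbit_s / 8               # bits -> bytes
    return total_gbyte_s / 1000                    # GB/s -> TB/s

# Assumed per-pin rate of 9.2Gb/s (not given in this article).
bw = stack_bandwidth_tb_s(9.2)
print(f"{bw:.3f} TB/s")  # prints "1.178 TB/s"
```

That works out to about 1.18TB/sec, which rounds to the headline 1.2TB/sec. Any pin speed slightly above 9.2Gb/s pushes the result past that mark.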

The company is moving straight from HBM2e to HBM3 Gen2 and skipping plain HBM3. Micron explains that the "Gen2" name is meant to "represent the generational leap in performance, capacity, and power efficiency".

Meanwhile, Micron's roadmap also lists "HBMNext", which could be a subtle nod to next-gen HBM4 memory. It is slated to arrive in 2026 with over 2TB/sec of memory bandwidth and capacities of up to 64GB. Don't expect to find it on the GPU in your next gaming PC, though: it's destined for higher-end AI systems and the like.

Micron's new HBM3 Gen2 is pin-compatible with HBM3, so GPU makers can adopt it as a drop-in upgrade. Micron stacks eight 24Gb dies to reach 24GB, a 50% capacity increase over comparable 8-high HBM3 stacks. HBM3 only reaches 24GB with a 12-high stack. Micron also fits HBM3 Gen2 into the same 11mm x 11mm footprint as regular HBM3, which is a decent density increase.
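The capacity figures above are simple stack arithmetic: the number of dies times the density of each die, then divided by 8 to convert gigabits to gigabytes. This minimal sketch assumes 16Gb dies for HBM3. The article doesn't say so directly, but that density follows from its "24GB on a 12-high stack" figure.

```python
GBIT_PER_GBYTE = 8

def stack_capacity_gb(dies: int, die_density_gbit: int) -> float:
    """Capacity of one HBM stack in gigabytes."""
    return dies * die_density_gbit / GBIT_PER_GBYTE

gen2_8high = stack_capacity_gb(8, 24)    # HBM3 Gen2: eight 24Gb dies
hbm3_8high = stack_capacity_gb(8, 16)    # HBM3: eight 16Gb dies (assumed density)
hbm3_12high = stack_capacity_gb(12, 16)  # HBM3: twelve 16Gb dies (assumed density)

gain = gen2_8high / hbm3_8high - 1       # relative capacity increase
print(gen2_8high, hbm3_8high, hbm3_12high, f"{gain:.0%}")  # prints "24.0 16.0 24.0 50%"
```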

But... Micron says a 12-high HBM3 Gen2 stack with 36GB of memory is "coming soon". That would give an eight-stack accelerator like AMD's Instinct MI300 access to an incredible 288GB of super-high-speed VRAM, while a six-stack design like NVIDIA's H100 would get 216GB.
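Those totals come from multiplying the per-stack capacity by the number of HBM stacks on the package. This rough sketch assumes 24Gb dies in the 12-high stack, which matches the 8-high part. It also assumes eight stacks for MI300 and six for H100, since those are the counts the 288GB and 216GB figures imply. These are illustrative numbers only, not announced products.

```python
def stack_capacity_gb(dies: int, die_density_gbit: int = 24) -> int:
    """Capacity of one stack in GB, assuming 24Gb dies."""
    return dies * die_density_gbit // 8  # gigabits -> gigabytes

def package_capacity_gb(stacks: int, gb_per_stack: int) -> int:
    """Total HBM capacity when every stack site is populated."""
    return stacks * gb_per_stack

per_stack_12high = stack_capacity_gb(12)                # 36 GB
mi300_total = package_capacity_gb(8, per_stack_12high)  # eight stacks (assumed)
h100_total = package_capacity_gb(6, per_stack_12high)   # six stacks (assumed)
print(per_stack_12high, mi300_total, h100_total)        # prints "36 288 216"
```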

Micron builds HBM3 Gen2 on the same 1β (1-beta) process node it uses for its DDR5 memory, and the node brings density and power efficiency improvements with it. Besides the 2.5x performance-per-watt gain over HBM2e, the company claims an improvement of over 50% in pJ/bit (picojoules per bit) over the previous generation.

Micron is currently sampling 8-high HBM3 Gen2 stacks to partners. 12-high stacks will follow in the near future, and high-volume production kicks off in 2024.